When we talk about Large Language Models (LLMs), either in general or in the context of Retrieval-Augmented Generation (RAG), we often come across use cases that revolve primarily around the traditional Data Analytics/Science realm. We don’t see many examples of LLM/RAG being used in the Networks and Unified Communications (UC/CC) technology space. The good news is that the OEMs have realized this too and have started taking initiatives, especially in the SaaS space. However, we don’t need to wait for that to happen. We have ample tools at our disposal to create our own custom solutions, which can then, of course, be integrated with any native LLM-derived product features if we so wish. My primary objective through this series is to explain and demonstrate use cases where LLM/RAG can provide better insight into the day-to-day management of Networks and UC infrastructure.

Although there is already plenty of information on LLMs and RAG available on the internet, I feel it is still important to have a quick primer here on what these technologies do at a basic level before we dive deeper into their practical use cases in the context of Networks and Unified Communications.

Basic LLM Workflow

A typical LLM workflow starts with us giving a prompt to the LLM platform, which then uses one of its models to respond to that prompt to the best of its capability.

In the above example, a user wants to know how the REST API functionality can be used in a script in the Cisco UCCX based Contact Center environment. It’s a very simple and straightforward question but none of the LLM platforms that I tried was able to give me a coherent and relevant answer. I can think of a couple of reasons why it failed.

- One reason could be that the model didn’t understand my prompt, which I find unlikely given how simple the prompt is.

- The second reason could be that there is not enough information pertaining to my question available in the public domain, which is what these public LLMs are mostly trained on.

For reference, I have provided brief snippets from two different LLM platforms (OpenAI and Cohere) below, and you can see that the output contains a lot of mumbo-jumbo about everything except the actual answer.

Cohere Output

Integrating a UCCX (Unified Communications Contact Center Express) script with other applications via a REST API involves a series of steps to ensure seamless communication and data exchange between the two systems. Here’s a detailed guide on how to achieve this integration:

Identify Integration Requirements:

- Determine the specific functionalities and data that need to be exchanged between the UCCX script and the external application. This could include call routing, agent management, customer data synchronization, or any other relevant processes.

Design API Endpoints:

- Define a set of REST API endpoints that correspond to the identified integration requirements. Each endpoint should have a unique URL and HTTP method (GET, POST, PUT, DELETE, etc.) associated with it.

- Document the API endpoints, including their URLs, parameters, request/response formats, and any authentication requirements. This documentation will serve as a reference for developers integrating with your UCCX script.

Implement API Endpoints:

- Develop the server-side logic to handle the API requests. This involves writing code that interacts with the UCCX script’s underlying system, such as Cisco Unified Communications Manager (CUCM) or other platforms, to perform the requested actions.

- Ensure that the API responses are structured in a standardized format, such as JSON or XML, to facilitate easy integration with the external application.

Authentication and Authorization:

- Implement secure authentication and authorization mechanisms to control access to the API. This could involve using OAuth 2.0, API keys, or other industry-standard authentication protocols.

- Define access control rules to determine which users, applications, or roles have permission to access specific API endpoints and perform certain actions.

Test and Debug:

- Conduct thorough testing of the API endpoints to ensure they function as intended and handle various scenarios.

- Debug any issues or errors that arise during the testing phase and make the necessary adjustments to the API implementation.

ChatGPT Output

Integrating a UCCX (Cisco Unified Contact Center Express) script with other applications via REST API involves making HTTP requests to external services from within the UCCX script. Cisco UCCX supports integrating with RESTful APIs using the HTTP Request step in its Call Studio scripting environment.

Steps to Integrate UCCX Script with REST API:

- Design the UCCX Script:

- In UCCX, scripts are designed using Cisco Unified CCX Editor (also called Call Studio). You can create scripts that handle call flows, decision trees, and interaction with external systems.

- Use the HTTP Request Step:

- UCCX scripts can send HTTP requests using the HTTP Request step to communicate with external REST APIs.

- This step allows you to make a GET, POST, PUT, or DELETE request to an external API and capture the response for use within the script.

- Set up the HTTP Request:

- In your UCCX script, add an HTTP Request step.

- You need to configure the following parameters:

- URL: The URL of the REST API endpoint you want to communicate with.

- Method: The HTTP method (GET, POST, PUT, DELETE) depending on the REST API you are interacting with.

- Headers: If required by the API, include any authentication or content-type headers (e.g., Authorization: Bearer <token>).

- Request Body: If the request method is POST or PUT, you might need to include a body. Typically, the body will be in JSON format.

- Process the API Response:

- The response from the REST API is captured by the HTTP Request step.

- UCCX scripts can parse the response to extract relevant data, such as success or failure indicators, or any other data returned by the API.

- For example, if the API returns a JSON response, you can use a String Parser step to extract specific fields from the JSON.

- Error Handling:

- You should include error handling in the script to capture cases where the API request fails (e.g., network issues, invalid responses, or timeout errors).

- UCCX provides variables such as HTTP Status Code and HTTP Error Code to check if the request was successful.

Example of Using the HTTP Request Step in a UCCX Script:

- Setup the HTTP Request Step:

- URL: https://api.example.com/submitTicket

- Method: POST

- Headers: Authorization: Bearer <token>, Content-Type: application/json

- Request Body: { "customer_id": "{CustomerID}", "issue_description": "{IssueDescription}" }

- Process the Response:

- Response from the API could be: { "status": "success", "ticket_id": "12345" }

- In the script, you can extract values from this response to use later. For example, capture the ticket_id into a variable.

- Handling Response in the Script:

- Use the String Parser to parse the ticket_id from the response.

- You can then use this ticket_id in further steps of the script, such as sending an email, logging the ticket, or updating a database.

- Error Handling:

- Check the HTTP Status Code. If it’s not 200 OK, you can log the error or take other action (e.g., retrying the request).

By using these steps, you can integrate UCCX scripts with external REST APIs, enabling dynamic interaction with external systems such as CRMs, databases, ticketing systems, or any other third-party services that expose REST APIs.

What is RAG and how does it help?

As we saw in the previous section, the public LLMs were of little help with a topic specific to our operations environment, even though the prompt was very generic. That’s why it doesn’t always make sense to rely on LLMs completely. Thankfully, we do have a solution that can exploit an LLM’s capabilities and yet not throw a crappy response. That solution comes in the form of RAG, which stands for Retrieval-Augmented Generation. It is a technique that combines the power of retrieval-based systems (external KBs, databases, APIs, etc.) with the capabilities of LLMs to enhance the performance and accuracy of language-based tasks. In simple terms, we are asking the LLM to use a custom repository of data (internal KBs, SOPs, documentation, etc.) to formulate its response instead of relying only on the public data it was trained on.

As demonstrated in the above example, there are scenarios where complete reliance on a public LLM will not be of much use. That doesn’t mean we should deprive ourselves of the powerful capabilities of LLMs. But it needs to be done in a smarter way, and that’s where additional tools like RAG come in handy.

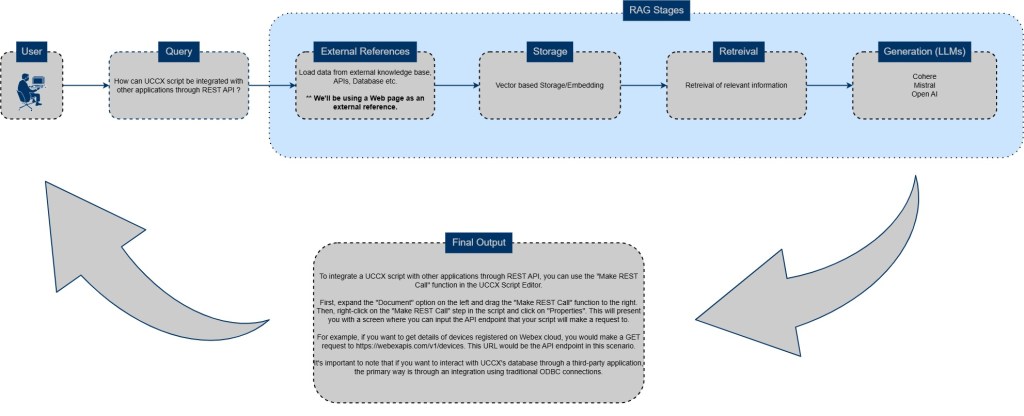

In the case of RAG, we add a step in the middle of a typical LLM workflow. That step relies on an external data source to find information relevant to our query and feeds that information to the LLM in the final step, where it is combined with the LLM’s own capability to formulate the final response. This external data source could be a KB article, a response from another API, or a database.

In this RAG-driven example, we have issued the same prompt, but instead of sending it directly to the LLM, we feed it through multiple steps that form the core of the RAG process. As a result, we get a much better and more coherent response to our query, as can be seen in the “Final Output” step. Please click on the image to see it in full scale.
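To make the retrieve, augment, and generate steps a bit more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the knowledge base entries are placeholder text (not real Cisco documentation), retrieval is a naive word-overlap ranking rather than the embedding search a real setup would likely use, and `fake_llm` is a hypothetical stand-in for whichever LLM API you actually call.

```python
import re

# Hypothetical internal knowledge base; these snippets are placeholders,
# not real Cisco documentation.
KNOWLEDGE_BASE = [
    "SOP-101: Placeholder text describing how a UCCX script calls a REST API.",
    "SOP-102: Placeholder text describing how to reset a voicemail PIN.",
    "SOP-103: Placeholder text describing switch firmware upgrade steps.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_k=1):
    """Retrieval step: rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query at all.
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_prompt(query, context_docs):
    """Augmentation step: attach the retrieved context to the user's question."""
    context = "\n".join(context_docs)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

def fake_llm(prompt):
    """Generation step: stand-in for a real LLM call; it only reports the prompt size."""
    return f"[model response based on a {len(prompt)}-character prompt]"

query = "How can I use a REST API in a UCCX script?"
context_docs = retrieve(query, KNOWLEDGE_BASE)
prompt = build_prompt(query, context_docs)
print(prompt)
print(fake_llm(prompt))
```

The point of the sketch is the shape of the flow: the prompt that finally reaches the model already carries the relevant internal content, so the model is no longer limited to whatever it picked up from public data.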

I’ve outlined some of the common reasons why RAG needs to be a part of our strategy if we plan to utilize LLMs in our operational workflows.

- Contextual Information Retrieval: RAG allows LLMs to pull relevant information from external data sources at query time. This means that the model can access and incorporate up-to-date, domain-specific knowledge that might not be present in its training data. By retrieving and integrating this external information, LLMs can provide more accurate and contextually relevant responses. This is evident from the example I have provided above.

- Improved Factual Accuracy: One of the challenges with LLMs is the potential for generating factually incorrect or outdated information, especially when dealing with rapidly evolving domains. RAG addresses this issue by letting the model ground its responses in reliable sources supplied at query time. This helps keep the generated content tied to factual evidence, which improves the overall trustworthiness of the LLM.

- Enhanced Personalization: RAG can be tailored to individual users or specific use cases. By integrating user-specific data or domain-relevant information into the retrieval process, LLMs can generate more personalized responses.

- Dynamic Knowledge Base: RAG allows for the continuous updating of the LLM’s knowledge base. As new information becomes available, it can be easily incorporated into the retrieval process, ensuring that the model remains up-to-date and adaptable.

- Reduced Hallucinations: Hallucinations, or the generation of false or misleading information, are a common concern with LLMs. RAG can mitigate this issue by grounding the model’s output in content retrieved from reliable sources, thus reducing the likelihood of false statements.
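Two of the points above, the dynamic knowledge base and the reduced hallucinations, can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: the `KnowledgeBase` class is a hypothetical in-memory store (a real deployment would more likely use a vector database), the SOP texts are placeholders, the similarity measure is plain bag-of-words cosine similarity, and the 0.3 threshold is an arbitrary value.

```python
import math
import re
from collections import Counter

def vectorize(text):
    """Turn text into a bag-of-words term-frequency vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

class KnowledgeBase:
    """Hypothetical in-memory store of (text, vector) pairs."""

    def __init__(self):
        self.entries = []

    def add(self, text):
        # New knowledge becomes searchable immediately; no model retraining.
        self.entries.append((text, vectorize(text)))

    def search(self, query, min_score=0.3):
        """Return the best-matching text, or None if nothing is similar enough."""
        query_vec = vectorize(query)
        best_text, best_score = None, 0.0
        for text, vec in self.entries:
            score = cosine(query_vec, vec)
            if score > best_score:
                best_text, best_score = text, score
        return best_text if best_score >= min_score else None

def grounded_prompt(query, kb):
    """Build a prompt that tells the model to admit ignorance when no context exists."""
    context = kb.search(query)
    if context is None:
        return (
            "No internal reference was found. Say that you do not know.\n"
            f"Question: {query}"
        )
    return f"Answer using only this context:\n{context}\nQuestion: {query}"

kb = KnowledgeBase()
kb.add("Placeholder SOP: voicemail PIN reset steps")
query = "certificate renewal procedure"
print(grounded_prompt(query, kb))  # nothing relevant yet, so the fallback prompt is used

kb.add("Placeholder SOP: certificate renewal procedure for the UC cluster")
print(grounded_prompt(query, kb))  # the newly added SOP is now supplied as context
```

The same question produces a different prompt as soon as the new document is added, and when nothing relevant exists the model is explicitly told not to guess.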

I hope this post helped you understand the role RAG plays in the LLM realm, and that you are now excited to see it being used to simplify some of the common use cases in the Networks and Unified Communications technology space.

Please feel free to share any feedback or suggestions. Until next time, happy learning!