In the last post, we went over a basic introduction to Retrieval Augmented Generation (RAG) and its usefulness in resolving real-world use cases in conjunction with LLMs (Large Language Models). In this post, we are going to take it further by working through an example.

The last post dealt with a scenario where we wanted to understand how a UCCX script can be integrated with other applications through a REST API. Using LLMs on their own wasn't much help, as the incoherent responses we received from different LLMs demonstrated: they lacked the specificity and clarity needed for practical application in complex environments. However, by introducing RAG into the picture, we significantly improved the quality of the responses. RAG's ability to fetch relevant information from external sources in real time and then synthesize that information with the generative capabilities of LLMs helps us create a more cohesive and contextually accurate interaction.

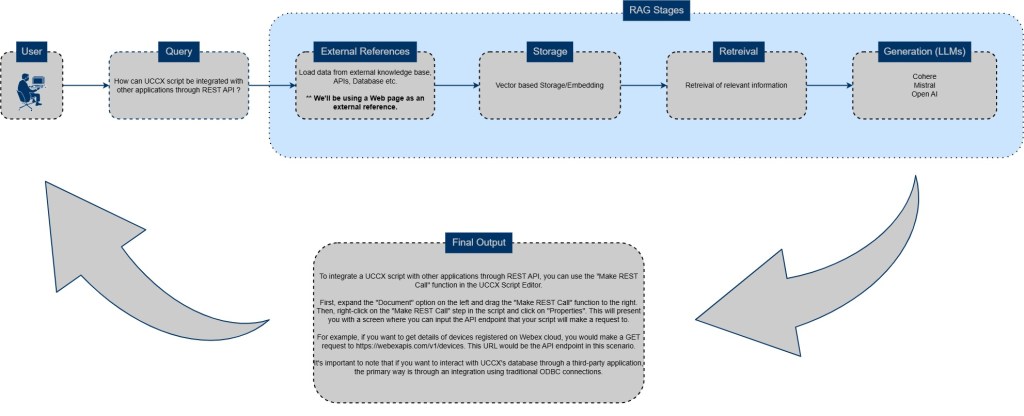

Example Workflow

I’ve reproduced the RAG-LLM workflow here for your reference.

Embeddings

One of the key actions performed within the RAG process is called “Embedding”. Embedding represents the external input sources (like SOPs, internal documentation, KB articles, etc.) as vectors in a high-dimensional space, where pieces of text with similar meaning end up close to each other. In practice, the documents are first split into multiple smaller pieces (chunks), each chunk is converted into a vector, and those vectors are kept in a vector store. For example, say a document has 2000 lines and we want to search for something within it. Splitting it into multiple chunks (maybe 4 chunks of 500 lines each) and comparing our query against each chunk's vector lets us pick out just the relevant chunk, which is much faster and more focused than searching through, or handing the LLM, the entire 2000-line document. This was just a small hypothetical example. In the real world, this sort of search may span much larger documents, or even multiple documents.
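To make the chunk-and-search idea concrete, here is a minimal, self-contained sketch. It is only an illustration under stated assumptions: `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model such as Cohere's embed-english-v3.0, the plain Python list plays the role of a vector store like Chroma, and the eight-line "document" is made up (a scaled-down version of the 2000-line / 4-chunk example).

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    # Hypothetical stand-in for a real embedding model: a bag-of-words count vector.
    # Real embeddings are dense learned vectors, but retrieval works the same way.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    # Cosine of the angle between two sparse vectors (1.0 = same direction)
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def split_lines(lines: list[str], chunk_size: int) -> list[str]:
    # Group consecutive lines into chunks of at most chunk_size lines
    return ["\n".join(lines[i:i + chunk_size]) for i in range(0, len(lines), chunk_size)]


def top_chunks(query: str, chunks: list[str], k: int = 1) -> list[str]:
    # "Vector store": one vector per chunk, computed once up front
    index = [(chunk, toy_embed(chunk)) for chunk in chunks]
    query_vector = toy_embed(query)
    ranked = sorted(index, key=lambda item: cosine_similarity(query_vector, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


# Made-up document: 8 lines split into 4 chunks of 2 lines each
document_lines = [
    "UCCX scripts are built in the UCCX Script Editor.",
    "Each script is a sequence of steps.",
    "The Make REST Call step sends an HTTP request to an API endpoint.",
    "The REST response can be stored in a script variable.",
    "Agents log in through Finesse.",
    "Supervisors monitor queues in real time.",
    "Reports are generated from the historical database.",
    "Reports can be scheduled and exported.",
]
chunks = split_lines(document_lines, 2)
query = "How can a UCCX script make a REST call to an API?"
best = top_chunks(query, chunks, k=1)
print(best[0])
```

Only the best-matching chunk (here, the one about the Make REST Call step) would be handed to the LLM, rather than the whole document.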

Now that we understand what embedding is and how external reference documents can be split into chunks and stored as vectors in a vector store, the next question is what sort of external reference material can be used as input to the larger LLM. In our example, both the embedding model and the final LLM are from Cohere. I've been working with Cohere models for more than 6 months and I love the way they perform. Also, I believe Cohere is the only company that offers an embedding model as part of its free tier. Others like OpenAI and Mistral do not offer free embedding models.

Sample Code

Here is a sample Python script that uses a popular framework called LangChain together with Cohere's models. It uses a web page as the source document, in conjunction with an LLM, to formulate the final response.

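```python
import os

from langchain_cohere import ChatCohere, CohereEmbeddings, CohereRagRetriever
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import Chroma
from langchain_text_splitters import CharacterTextSplitter

# Assumed setup: the Cohere API key is read from an environment variable
cohere_api_key = os.environ["COHERE_API_KEY"]

# 1. Embedding model that turns text chunks into vectors
embeddings2 = CohereEmbeddings(cohere_api_key=cohere_api_key,
                               model="embed-english-v3.0")

# 2. Chat LLM that formulates the final response
llm = ChatCohere(cohere_api_key=cohere_api_key,
                 model="command-r-08-2024",
                 temperature=0)

# 3. The question we want answered
user_query_second = "How can UCCX script be integrated with other application through REST API ? "

# 4. Load the reference web page
loader = WebBaseLoader("https://learnuccollab.com/2023/08/05/cisco-uccx-with-rest-api-integration-unlocking-powerful-automation/")
docs = loader.load()

# 5. Split the page into chunks of up to 1000 characters
text_ACL = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)
doc_ACL = text_ACL.split_documents(docs)

# 6. Embed the chunks into Chroma, then retrieve the chunks most relevant to the query
db_ACL = Chroma.from_documents(doc_ACL, embeddings2)
input_docs_ACL = db_ACL.as_retriever().invoke(user_query_second)

# 7. Ask Cohere's RAG retriever to generate an answer grounded in the retrieved chunks
rag = CohereRagRetriever(llm=llm)
docs_ACL = rag.invoke(
    user_query_second,
    documents=input_docs_ACL,
)

# The last document returned holds the generated answer
ans_ACL = docs_ACL[-1].page_content
print(ans_ACL)
```

Let's go through the code step by step. I suggest you correlate it with the flowchart given above.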

- We start off by defining the embedding model. In this case, we are using Cohere's embed-english-v3.0 model.

- We then define the final LLM that will formulate the final response to our query. Here, we are using Cohere's LLM command-r-08-2024.

- We then use one of the “loaders”. As the name suggests, there are different ways to load an external document as reference material, which will eventually be fed into the LLM. It is this document that is split into chunks, and those chunks then go through the embedding phase. In this particular case, we are using one of LangChain's document loaders, “WebBaseLoader”, which can take any web page as a reference. I am using one of my earlier blog posts, which explains how UCCX scripts can be made to talk to other applications via REST APIs.

- We then use another LangChain class, “CharacterTextSplitter”, to define how the source document should be split, and then actually split it into chunks.

- We then convert those chunks into vectors using Cohere's embedding model and store them in Chroma DB, one of the popular vector stores.

- We then run our query (“How can UCCX script be integrated with other application through REST API?”) against the vector store, which returns the chunks most relevant to it.

- Finally, we send the output of the previous step, which by now contains the information needed to answer our query, to the main LLM, which formulates the final response.
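To illustrate what “sending the retrieved chunks to the LLM” means, here is a generic sketch of the augmentation step: the retrieved chunks and the query are combined into a single grounded prompt. This is an assumption-labeled illustration, not what CohereRagRetriever does internally (as far as I know, it passes the chunks to Cohere's chat API as separate documents rather than as one prompt string). The function name `build_grounded_prompt` and the prompt wording are hypothetical.

```python
def build_grounded_prompt(query: str, retrieved_chunks: list[str]) -> str:
    # Hypothetical helper: number each retrieved chunk so the LLM can refer to it
    context = "\n\n".join(
        f"[Document {i}]\n{chunk}" for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    # Instruct the model to stay within the supplied context
    return (
        "Answer the question using only the documents below. "
        "If they do not contain the answer, say so.\n\n"
        f"{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Made-up chunks standing in for the retriever's output
retrieved = [
    "The Make REST Call step sends an HTTP request to an API endpoint.",
    "The REST response can be stored in a script variable.",
]
prompt = build_grounded_prompt("How can a UCCX script call a REST API?", retrieved)
print(prompt)
```

The resulting string would then be sent to the chat model. Grounding the answer in the retrieved text, rather than in the model's training data alone, is what makes the output more specific.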

The above program produces the following output. As can be seen, this output is much better and more coherent than what we would generally get directly from an LLM. You can refer to my previous post to see how random and incoherent the responses from public LLMs were without the involvement of RAG.

To integrate a UCCX script with other applications through a REST API, you can use the “Make REST Call” function in the UCCX Script Editor.

First, expand the “Document” option on the left and drag the “Make REST Call” function to the right. Then, right-click on the “Make REST Call” step in the script and click on “Properties”. This will present you with a screen where you can input the URL, which is the API endpoint that your script will make a request to.

For example, if you want to get details of devices registered on Webex cloud, you would make a GET request to https://webexapis.com/v1/devices. This would be the URL in this scenario.

UCCX also has the capability to offer an IVR-based system to callers, where they can provide DTMF inputs while the script captures that data and interacts with other third-party applications over REST APIs in real-time.

This is the advantage of using RAG. It's not always perfect and is definitely not applicable to all use cases. But it's much better than relying completely on LLMs trained solely on publicly available data, especially in scenarios where the majority of your use cases depend on proprietary, non-public data.

I hope this post sparked your curiosity about the fascinating world of LLMs/Gen AI/RAG. I will be adding more such material in the coming weeks, with practical examples that handle real-world operational use cases. Until then, happy learning!